The field of natural language processing (NLP) has witnessed significant advancements in the development of language models. OpenAI Whisper and GPT models are among the most sophisticated models that have revolutionized the way we process and analyze human language. One of the most promising applications of these models is in the area of audio transcription and summarization. With the rise of podcasting, video content, and online meetings, there is a growing need for accurate and efficient transcription and summarization of audio data. This type of functionality is especially useful in healthcare, where doctors can benefit from tools that accurately transcribe their audio conversations with patients into text and then summarize key points and actionable items from this text.

The following blog will explore how artificial intelligence can aid doctor-patient consultations. It will then provide a step-by-step guide showing you how to integrate OpenAI Whisper and ChatGPT into your app to provide AI-enhanced real-time communication for healthcare.

Note regarding AI-powered healthcare solutions: If you require HIPAA compliance for your medical app, you should not use OpenAI’s general API to process HIPAA-regulated data (transcripts). Instead, you should ensure that you use a service that offers HIPAA compliance. Current options include the Microsoft Azure Cognitive Services OpenAI API; other providers may also offer HIPAA-compliant transcription services.

Using AI in Healthcare

There are many use cases for artificial intelligence in Medicine. For example, machine learning systems can be used for detecting diseases from images and analyzing patient symptoms. Deep learning systems can help doctors to predict and prevent diseases and infections. Medical AI can also be used for automating processes such as treatment planning, patient intake, and so on.

One other exciting healthcare AI use case is the summarization of medical notes and communications between doctors and patients. Nuance Communications, a clinical documentation software company owned by Microsoft, recently announced that it is adding GPT-4, OpenAI’s successor to ChatGPT, to its latest application. The company stated that the new app can use GPT-4 to summarize conversations between clinicians and patients and enter them directly into electronic health record systems.

Adding AI-powered audio transcription and summarization to healthcare apps can offer several benefits to healthcare providers and patients.

Firstly, audio transcription can make it easier for healthcare professionals to document patient consultations, procedures, and medical histories. With accurate transcriptions of audio recordings, healthcare providers can easily review and analyze important information from patient visits, improving the accuracy and efficiency of medical documentation.

Secondly, audio transcription can improve the accessibility of healthcare services. Patients who are deaf or hard of hearing, or who speak a different language, may have difficulty communicating with healthcare providers. When providers supply transcriptions of medical consultations, patients can review the information at their own pace, in a format that is accessible to them.

Thirdly, audio transcription can support medical research and analysis. By transcribing audio recordings of medical consultations and procedures, researchers can analyze patient data in new ways, identifying patterns and trends that may not have been apparent before. This can lead to new insights and discoveries in medical research.

Building Audio Transcription & Summarization Tools Using OpenAI

The OpenAI API is a platform that provides developers access to OpenAI’s artificial intelligence models, including Whisper and ChatGPT, both released in late 2022.

Whisper is an automatic speech recognition model that provides the basis for OpenAI’s transcription service. The transcription API can automatically convert the speech in audio and video files into written text, and it can recognize speech even in noisy or difficult-to-understand recordings. In addition to transcription, the API can translate speech in other languages into English text.

ChatGPT is a powerful language model that has been trained on an enormous amount of text data, allowing it to generate human-like text. It has a variety of uses, but pertinent to this topic it can be used to automatically summarize long pieces of text, distilling the key points into a shorter summary, and converting complex ideas into simpler concepts.

So how exactly can we use these models to enhance doctor-patient communication? For the rest of this article, we will show you how to integrate the functionality of OpenAI Whisper and ChatGPT into your application to build a sophisticated tool that can achieve the following:

- Transcribe an audio file of the doctor’s consultation with a patient.

- Create a summary report of the doctor’s consultation based on the transcription.

- Create a more refined set of meeting notes, based on the summary text.

- Distill a set of action points, or doctor’s recommendations, based on the meeting notes.
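
The four steps above form a chain in which each step consumes the output of the previous one. A minimal sketch of that data flow follows. It assumes hypothetical step functions (transcribe, summarize, makeNotes, makeActions) that are passed in rather than defined here; the real implementations are built later in this article.

```javascript
// Hypothetical sketch: chains four async steps so each one consumes the previous output.
// The step functions are injected, so this code makes no API calls by itself.
const runConsultationPipeline = async (audioFileName, steps) => {
    const transcription = await steps.transcribe(audioFileName);
    const summary = await steps.summarize(transcription);
    const notes = await steps.makeNotes(summary);
    const actions = await steps.makeActions(notes);
    // Return every intermediate result so the caller can display or store each stage.
    return { transcription, summary, notes, actions };
};
```

Passing the steps in as arguments keeps the flow easy to test with stand-in functions before any API key is available.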

For this project we will be using a GPT-3 model (text-davinci-003).

Please note, the code samples from the article can be downloaded on GitHub:

https://github.com/QuickBlox/examples/tree/main/Articles

Let’s get started!

Part 1: Initial Set-up

1. Create the project

Create a directory to store your Node.js project.

2. Initialize the Project

You can initialize the project by running the npm init command inside the project directory. This command generates a package.json file there; the -y flag accepts the default answers to its prompts.

    mkdir sample
    cd sample
    npm init -y

Create a file named index.js for your application code.

3. Install Dependencies

Next, we need to install any necessary dependencies:

- Axios is a package that provides an easy-to-use API for making HTTP requests from Node.js.

- form-data is a module for building request bodies that contain key-value pairs and files to be sent with HTTP requests.

Run the command to install dependencies in the project:

npm i axios form-data

In the index.js file, include the installed modules:

    const axios = require('axios');
    const FormData = require('form-data');

Let’s add a main method to start our application:

    const main = () => {
        console.log('run app...');
    }

    main();

Add an example mp3 file (example01.mp3) to the root directory of our project.

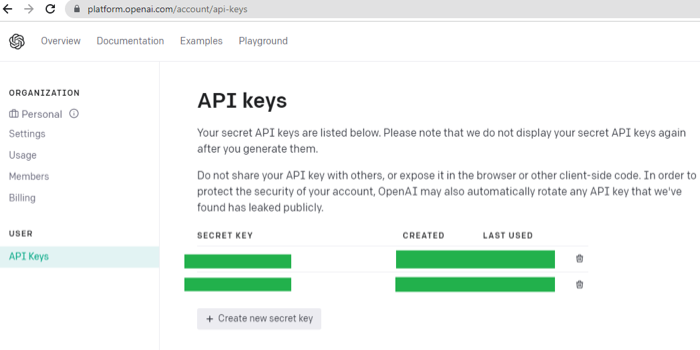

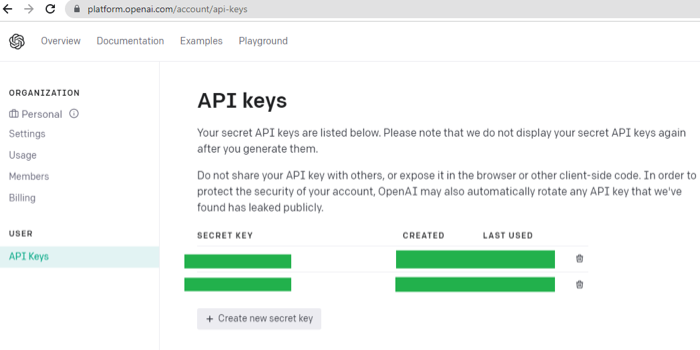

4. Register for an OpenAI account and get an API Key.

To get keys for OpenAI, you first need to create a free account on the OpenAI website. After creating an account, you can generate API keys that you use to access the OpenAI API.

Add the SECRET_KEY constant to the index.js code and set it to the value you got in the API keys section:

    const SECRET_KEY = '<YOUR KEY>';
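
Hard-coding a key in source code makes it easy to leak by accident, for example when the file is committed to a repository. One common alternative is to read the key at startup and fail early if it is missing. The sketch below assumes the key has been exported in an environment variable named OPENAI_API_KEY; that name and the helper readSecretKey are choices made for this example, not something the rest of the article depends on.

```javascript
// Reads the API key from an environment-like object (defaults to process.env).
// OPENAI_API_KEY is an assumed variable name for this sketch.
const readSecretKey = (env = process.env) => {
    const key = env.OPENAI_API_KEY;
    if (!key || key.trim() === '') {
        // Fail early with a clear message instead of sending an unauthenticated request.
        throw new Error('OPENAI_API_KEY is not set');
    }
    return key.trim();
};

// Usage (would replace the hard-coded constant):
// const SECRET_KEY = readSecretKey();
```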

Part 2: Connect App to OpenAI Services

Now we’re ready to add the OpenAI-powered functionality that enables healthcare professionals to transcribe and summarize their verbal conversations with patients. As a reminder, we intend to enable the following capabilities:

- Transcribe an audio file into text.

- Summarize the text.

- Create meeting notes from the summary text.

- Create action points from the meeting notes.

1. Connecting the Application to OpenAI Transcription Service

To create transcription functionality we need to connect our application to OpenAI’s transcription API. Documentation for this service can be found here.

Transcribe the Audio File

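Let’s connect our app to Whisper by adding this function to our program code. It reads the audio file with Node’s built-in fs/promises module, so that module is required first:

    const fs = require('fs/promises');

    // Uploads an audio file to OpenAI's transcription endpoint and returns the transcribed text.
    const getTranscription = async (fileName) => {
        // Read the whole audio file into memory as a Buffer.
        const audioFile = await fs.readFile(fileName);

        // The endpoint expects multipart/form-data containing the file and the model name.
        const form = new FormData();
        form.append('file', audioFile, fileName);
        form.append('model', 'whisper-1');

        const response = await axios.post(
            'https://api.openai.com/v1/audio/transcriptions',
            form,
            {
                headers: {
                    // Adds the multipart Content-Type header, including its boundary.
                    ...form.getHeaders(),
                    Authorization: `Bearer ${SECRET_KEY}`,
                },
            }
        );

        return response.data.text;
    };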
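
Before uploading, it can help to reject files the transcription endpoint is unlikely to accept. The sketch below checks the file extension and size. The 25 MB limit and the list of formats reflect OpenAI’s documentation at the time of writing and should be treated as assumptions that may change; findAudioProblems is a hypothetical helper name.

```javascript
// Limits taken from OpenAI's documentation at the time of writing; treat them as assumptions.
const MAX_AUDIO_BYTES = 25 * 1024 * 1024;
const SUPPORTED_EXTENSIONS = ['mp3', 'mp4', 'mpeg', 'mpga', 'm4a', 'wav', 'webm'];

// Returns a list of problems; an empty list means the file looks acceptable.
const findAudioProblems = (fileName, sizeInBytes) => {
    const problems = [];
    // Take the text after the last dot as the extension, or '' when there is no dot.
    const extension = fileName.includes('.')
        ? fileName.split('.').pop().toLowerCase()
        : '';
    if (!SUPPORTED_EXTENSIONS.includes(extension)) {
        problems.push(`unsupported file type: "${extension}"`);
    }
    if (sizeInBytes > MAX_AUDIO_BYTES) {
        problems.push(`file is ${sizeInBytes} bytes; the limit is ${MAX_AUDIO_BYTES}`);
    }
    return problems;
};
```

Inside getTranscription, the size is available as audioFile.length once the file has been read, so the check could run just before the form is built.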

Let’s add a call to it in the main function:

    const main = async () => {
        console.log('run app...');

        console.log("\n==============\n Transcription:");
        const transcriptionText = await getTranscription('example01.mp3');
        console.log(transcriptionText);
    }

Next, we need to run our application in the terminal:

node index

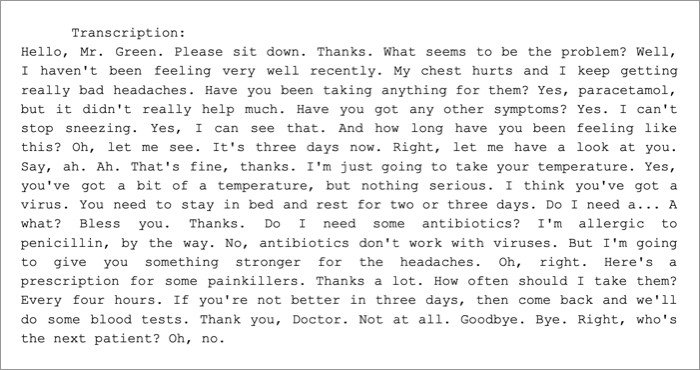

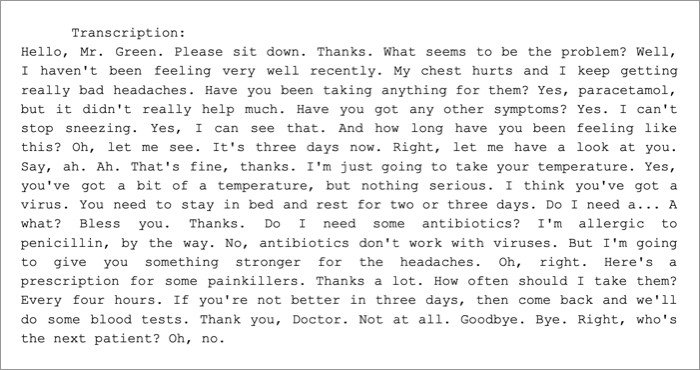

Great! Processing the audio file produces the following transcription:

2. Connecting the Application to OpenAI GPT-3

Next we’re going to connect our application to OpenAI’s GPT-3 so that we can add summarization functionality to our app. You can learn more about this service here.

Create Summary of Transcription

Let’s connect GPT-3 to our application and create a summary of the meeting by adding this function to our program code:

    // Asks the completions endpoint to turn the raw transcription into a first-hand summary.
    const getSummary = async (text) => {
        const commandText =
            "Convert my short hand into a first-hand account of the meeting:\n\n";

        const body = {
            prompt: `${commandText} ${text}`,
            model: 'text-davinci-003',
            temperature: 0,      // always pick the most likely token, for repeatable output
            max_tokens: 256,     // upper bound on the length of the generated summary
            top_p: 1,
            frequency_penalty: 0,
            presence_penalty: 0
        };

        const response = await axios.post(
            'https://api.openai.com/v1/completions',
            body,
            {
                headers: {
                    Authorization: `Bearer ${SECRET_KEY}`,
                },
            }
        );

        return response.data.choices[0].text;
    }
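
Because the request caps the reply at max_tokens: 256, a long consultation may produce a summary that stops mid-sentence. The Completions API reports this through the finish_reason field of each choice, which is "length" when the cap was reached. The following sketch shows one way to surface that; readCompletion is a hypothetical helper name and is not part of the article’s code.

```javascript
// Extracts the generated text from a completions response body and reports
// whether generation stopped because the max_tokens cap was reached.
const readCompletion = (responseData) => {
    const choice = responseData.choices && responseData.choices[0];
    if (!choice) {
        throw new Error('The response contained no choices');
    }
    return {
        text: choice.text.trim(),
        truncated: choice.finish_reason === 'length',
    };
};

// Usage inside getSummary (sketch):
// const { text, truncated } = readCompletion(response.data);
// if (truncated) console.warn('Summary was cut off; consider raising max_tokens.');
```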

Let’s call getSummary from the main function of the project:

    const main = async () => {
        console.log('run app...');

        console.log("\n==============\n Transcription:");
        const transcriptionText = await getTranscription('example01.mp3');
        console.log(transcriptionText);

        console.log("\n==============\n Summary:");
        const summaryText = await getSummary(transcriptionText);
        console.log(summaryText);
    }

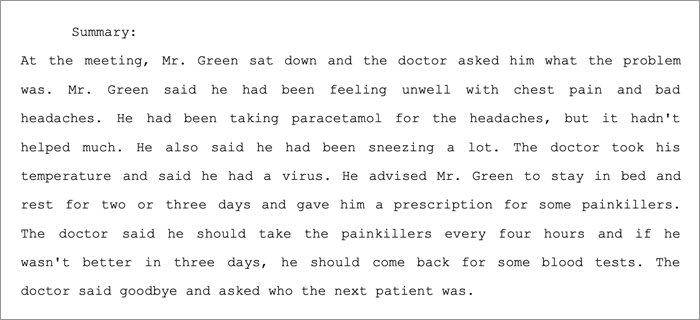

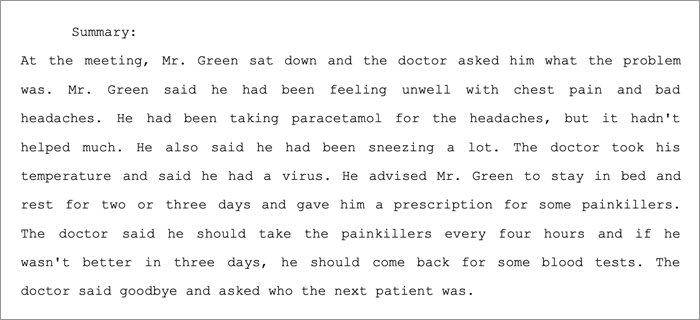

By executing the code we get the following result:

Create Meeting Notes

We can also use GPT-3 to create meeting notes from the summary by adding the following code:

    // Asks the completions endpoint to turn the summary into structured meeting notes.
    const getNotes = async (text) => {
        const commandText = "Create meeting notes:\n\n";

        const body = {
            prompt: `${commandText} ${text}`,
            model: 'text-davinci-003',
            temperature: 0,
            max_tokens: 256,
            top_p: 1,
            frequency_penalty: 0,
            presence_penalty: 0
        };

        const response = await axios.post(
            'https://api.openai.com/v1/completions',
            body,
            {
                headers: {
                    Authorization: `Bearer ${SECRET_KEY}`,
                },
            }
        );

        return response.data.choices[0].text;
    }
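
getNotes differs from getSummary only in its instruction text, and the same is true of the action points function later in this article. One way to avoid repeating the request settings is to build the body in a single place. The sketch below uses hypothetical names (DEFAULT_COMPLETION_OPTIONS, buildCompletionBody) and copies the settings used in this article; it is an optional refactoring, not a required step.

```javascript
// Settings shared by every completion request in this article.
const DEFAULT_COMPLETION_OPTIONS = {
    model: 'text-davinci-003',
    temperature: 0,
    max_tokens: 256,
    top_p: 1,
    frequency_penalty: 0,
    presence_penalty: 0,
};

// Builds a request body from an instruction and the text it applies to.
// Values in `overrides` replace the defaults, e.g. { max_tokens: 512 }.
const buildCompletionBody = (instruction, text, overrides = {}) => ({
    ...DEFAULT_COMPLETION_OPTIONS,
    ...overrides,
    prompt: `${instruction} ${text}`,
});

// Usage (sketch):
// const body = buildCompletionBody("Create meeting notes:\n\n", summaryText);
```

With a helper like this, each of the three functions reduces to its instruction string plus one shared request call.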

Let’s change the main function:

    const main = async () => {
        console.log('run app...');

        console.log("\n==============\n Transcription:");
        const transcriptionText = await getTranscription('example01.mp3');
        console.log(transcriptionText);

        console.log("\n==============\n Summary:");
        const summaryText = await getSummary(transcriptionText);
        console.log(summaryText);

        console.log("\n==============\n Notes:");
        const notesText = await getNotes(summaryText);
        console.log(notesText);
    }

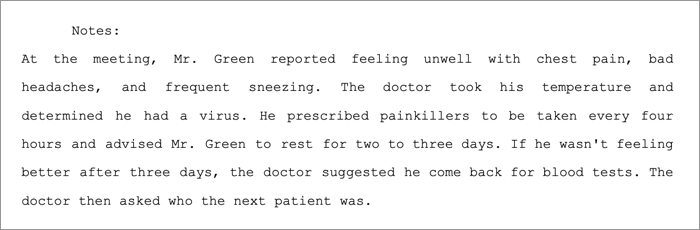

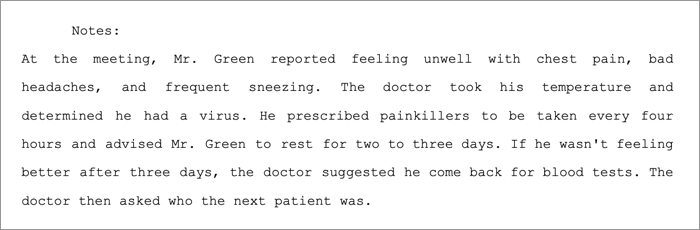

Result of code execution:

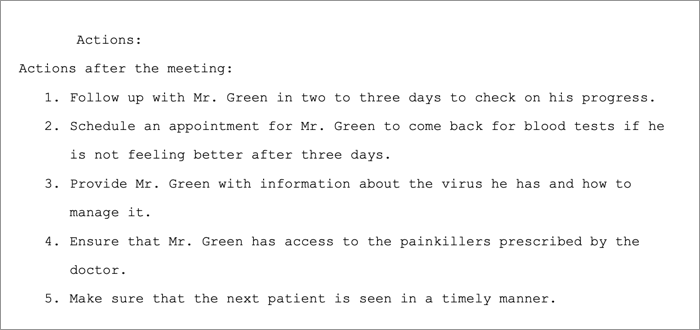

Create Action Points

Finally, we can also add code to prompt GPT-3 to generate action points from the meeting notes.

    // Asks the completions endpoint to derive follow-up actions from the meeting notes.
    const getActions = async (text) => {
        const commandText = "Create actions after the meeting:\n\n";

        const body = {
            prompt: `${commandText} ${text}`,
            model: 'text-davinci-003',
            temperature: 0,
            max_tokens: 256,
            top_p: 1,
            frequency_penalty: 0,
            presence_penalty: 0
        };

        const response = await axios.post(
            'https://api.openai.com/v1/completions',
            body,
            {
                headers: {
                    Authorization: `Bearer ${SECRET_KEY}`,
                },
            }
        );

        return response.data.choices[0].text;
    }

The complete main function now looks like this:

    const main = async () => {
        console.log('run app...');

        console.log("\n==============\n Transcription:");
        const transcriptionText = await getTranscription('example01.mp3');
        console.log(transcriptionText);

        console.log("\n==============\n Summary:");
        const summaryText = await getSummary(transcriptionText);
        console.log(summaryText);

        console.log("\n==============\n Notes:");
        const notesText = await getNotes(summaryText);
        console.log(notesText);

        console.log("\n==============\n Actions:");
        const actionsText = await getActions(notesText);
        console.log(actionsText);
    }

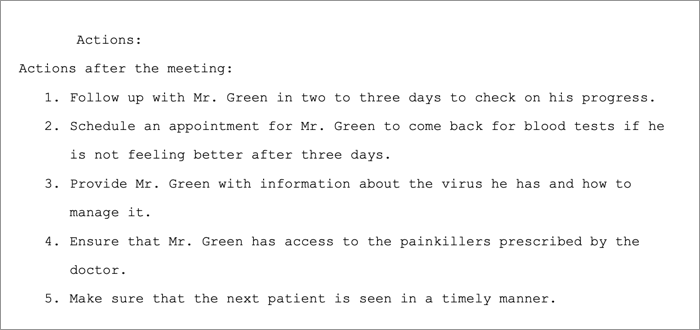

By executing this code we get a succinct list of relevant action points, which is an extremely useful feature for medical professionals.
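
The main function makes four network requests, and any of them can fail, for example because of an invalid key or a rate limit. Axios attaches the HTTP response to the error it throws (error.response), and OpenAI’s error responses carry a message under error.message in the body. A small sketch, using a hypothetical helper named describeApiError, that turns such failures into a readable line:

```javascript
// Produces a one-line description of a failed request.
// Handles both HTTP errors (a response arrived) and network errors (none did).
const describeApiError = (error) => {
    if (error.response) {
        const details = error.response.data && error.response.data.error
            ? error.response.data.error.message
            : 'no error details in response body';
        return `OpenAI API returned HTTP ${error.response.status}: ${details}`;
    }
    return `Request failed before a response arrived: ${error.message}`;
};

// Usage (sketch): report failures instead of leaving the rejection unhandled.
// main().catch((error) => console.error(describeApiError(error)));
```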

Summary

Many healthcare apps lack the ability to effectively process and summarize large amounts of medical data. This is where advanced speech-to-text and summarization technology, such as OpenAI Whisper and GPT, can provide a solution. By implementing these technologies, healthcare apps can provide more accurate and personalized medical information to users, improving their overall experience and increasing their ability to manage their health effectively.

This tutorial showed you how to integrate OpenAI services to transcribe an audio file into text, summarize the text, and derive meeting notes and action points from it. Of course, this functionality is not limited to healthcare apps: a wide variety of use cases, including education, legal consultancy, and finance, can benefit from this AI technology as well.

If you are interested in building AI-enhanced chat apps, speak with our team at QuickBlox. We are a communication solution provider, offering SDKs, APIs and customizable plugins, including our video consultation app. Find out more about our AI enhanced Q-Consultation app.

